The Impakt Festival officially begins next Wednesday, but in the weeks prior to the event, Impakt has been hosting numerous talks, dinners and also a weekly “Movie Club,” which has been a social anchor for my time in Utrecht.

Every Tuesday, after a pizza dinner and drinks, an expert in the field of new media introduces a relatively recent film about machine intelligence, prompting questions that frame larger issues of human-machine relations in the films. An American audience might be impatient about a 20-minute talk before a movie, but in the Netherlands, the audience has been engaged. Afterwards, many linger in conversations about the very theme of the festival: Soft Machines.

The films have included I, Robot, Transcendence, Her and the documentary Game Over: Kasparov and the Machine. They vary in quality, but with the introduction of the concepts ahead of time, even Transcendence, a thoroughly lackluster film, engrossed me.

The underlying question that we end up debating is: can machines be intelligent? This seems to be a simple yes-or-no question, one that cleaves any group into either a technophilic pro-Singularity camp or a curmudgeonly Luddite camp. It’s a binary trap, like the Star Trek debates between Spock and Bones. The real question is far more compelling and complex.

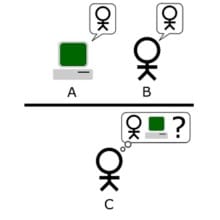

The Turing test is often cited as the starting point for this question. For those of you who are unfamiliar with this thought experiment, it was developed by the British mathematician and computer scientist Alan Turing in a 1950 paper that asked the simple question: “Can machines think?”

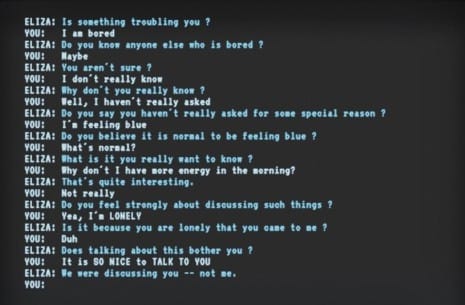

The test goes like this: suppose you have someone at a computer terminal who is conversing with an entity by typing text back and forth, much as we now regularly do with instant messaging. The entity at the other terminal is either a computer or a human, and the user does not know which. The user can have a conversation and ask questions. If he or she cannot ascertain “human or machine” after about 5 minutes, then the machine passes the Turing test. It responds as a human would and can effectively “think”.

In 1990, the thought experiment became a reality with the Loebner Prize. Every year, various chatbots — algorithms which converse via text with a computer user — compete to try to fool humans in a setup that replicates this exact test. Some algorithms have come close, but to date, no computer has ever successfully won the prize.

The story goes that Alan Turing was inspired by a popular party game of the era called the “Imitation Game”, where a questioner would ask an interlocutor various questions. This intermediary would then relay these questions to a hidden person who would answer via handwritten notes. The job of the questioner was to try to determine the gender of the unknown person. The hidden person would provide ambiguous answers. A question of “What is your favorite shade of lipstick?” could be answered by “It depends on how I feel”. The answer in this case is a dodge, as a 1950s man certainly doesn’t know the names of lipstick shades.

Both the Turing test and the Imitation Game hover around the act of deception. This technique, widely deployed in predator-prey relationships in nature, is engrained in our biological systems. In the Loebner Prize competitions, there have even been instances where the human and computer will try to play with the judges, making statements like: “Sorry I am answering this slowly, I am running low on RAM”.

It may sound odd, but the computer doesn’t really know deception. Humans do. Every day we work with subtle cues of movement around social circles, flirtation with one another, exclusion and inclusion into a group and so on. These often rely on shades of deception: we say what we don’t really mean and have other agendas than our stated goals. Politicians, business executives and others who occupy high rungs of social power know these techniques well. However, we all use them.

The artificial intelligence software that powers chatbots has evolved rapidly over the years. Natural language processing (NLP) is widely used in various software industries. I had an informative lunch the other day in Amsterdam with a colleague of mine, Bruno Jakic at Applied AI, who I met through the Affect Lab. Among other things, he is in the business of sentiment analysis, which helps, for example, determine if a large mass of tweets indicates a positive or negative emotion. Bruno shared his methodology and working systems with me.

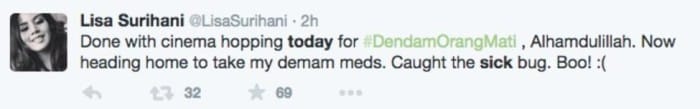

State-of-the-art sentiment analysis algorithms are generally effective, operating in the 75-85% range for identification of a “good” or “bad” feeling in a chunk of text such as a Tweet. Human consensus is in a similar range. Apparently, a group of people cannot agree on how “good” or “bad” various Twitter messages are, so machines are coming close to being as effective as humans on a general scale.

The NLP algorithms deploy brute-force methods, crunching through millions of sentences using human-designed “classifiers”: rules to help determine how a sentence looks. For example, an emoticon like a frown-face almost always indicates a bad feeling.

Computers can figure this out because machine perception is millions of times faster than human perception. A computer can run through examples, rules and more, but it acts on logic alone. If NLP software code generally works, where specifically does it fail?

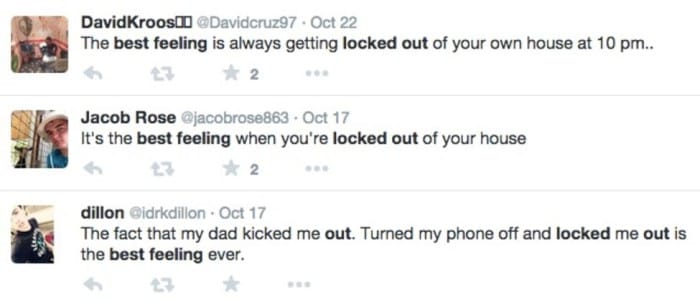

Bruno pointed out that machines are generally incapable of figuring out if someone is being sarcastic. Humans immediately sense this by intuitive reasoning. We know, for example, that getting locked out of your own house is bad. So if you write that this is, contradictorily, a good thing, it is obviously sarcastic. The context is what our “intuition”, or emotional brain, understands. It builds upon shared knowledge that we gather over many years.
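To make the sarcasm problem concrete, here is a toy sketch of a rule-based classifier in Python. It is not Bruno’s system or any real NLP library: the cue lists and the `classify` function are hypothetical, invented for illustration, and real classifiers draw on far larger sets of rules and examples. It only shows how surface cues can get a plain message right and a sarcastic one wrong.

```python
# Hypothetical, hand-picked cue lists (assumptions for illustration only).
POSITIVE_CUES = {"great", "love", "awesome", ":)"}
NEGATIVE_CUES = {"terrible", "hate", "awful", ":("}


def classify(text: str) -> str:
    """Label text as positive, negative or neutral by counting surface cues."""
    # Lowercase, split on whitespace, and trim trailing sentence punctuation.
    tokens = [t.strip(".,!?") for t in text.lower().split()]
    score = sum(t in POSITIVE_CUES for t in tokens) - sum(
        t in NEGATIVE_CUES for t in tokens
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# A frown-face is an easy cue to catch.
print(classify("Missed my train again :("))
# Sarcasm: the only cue the rules see is the word "great".
print(classify("Locked out of my own house. Great start to the day"))
```

The second message comes back as positive, because nothing in the rules knows that being locked out of your house is bad. That shared knowledge is exactly what the classifier lacks.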

The Movie Club films also tackle this issue of machine deception. At a critical moment in I, Robot, Sonny, the main robot character, deceives the “bad” AI software that is attacking the humans by pretending to hold a gun to one of the main “good” characters. It then winks at Will Smith (the protagonist) to let him know that it is tricking the evil AI machine. Sonny and Will Smith then cooperate, Hollywood-style, with guns blazing. Of course, they prevail in the end.

Sonny possesses a sophisticated Theory of Mind: an understanding of its own mental state as well as that of the other robots and Will Smith. It takes initiative and pretends to be on the side of the evil AI computer by taking an aggressive action. Earlier in the film, Sonny learned what winking signifies. It knows that the AI doesn’t understand this, and so the wink will be understood by Will Smith and not by the evil AI.

In Game Over: Kasparov and the Machine, which recasts the narrative of the Deep Blue vs. Kasparov chess matches, the Theory of Mind of the computer resurfaces. We know that Deep Blue won the match, which was a series of six games in 1997. It was the infamous Game 2 that obsessed Kasparov. The computer played aggressively and more like a human than Kasparov had expected.

At move 45, Kasparov resigned, convinced that Deep Blue had outfoxed him that day. Deep Blue had responded in the best possible way to Kasparov’s feints earlier in the game. Chess experts later discovered that Kasparov could have easily forced an honorable draw instead of resigning the game.

The computer appeared to have made a simple error. Kasparov was baffled and obsessed. How could the algorithm have failed on a simple move, when it was so thoroughly strategic earlier in the game? It didn’t make sense.

Kasparov felt like he was tricked into resigning. What he didn’t consider was that when the algorithm didn’t have enough time to find the best-ranked move (tournament chess games are run against a clock), it would choose randomly from a set of moves, much like a human would do in similar circumstances. The decision we humans make is emotional at this point. Inadvertently, the machine deceived Kasparov.
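The fallback described above can be sketched in a few lines of Python. This is emphatically not Deep Blue’s actual code: `choose_move`, `evaluate` and `time_budget_s` are hypothetical names, and a real chess engine searches a tree of positions rather than scoring a flat list. It is only a minimal illustration of “rank everything if the clock allows, otherwise pick at random”.

```python
import random
import time


def choose_move(candidates, evaluate, time_budget_s, rng=random):
    """Return the best-scored move, or a random one if the clock runs out.

    candidates, evaluate and time_budget_s are illustrative assumptions,
    not part of any real chess engine's interface.
    """
    if not candidates:
        raise ValueError("no candidate moves")
    deadline = time.monotonic() + time_budget_s
    scored = {}
    for move in candidates:
        if time.monotonic() >= deadline:
            break  # out of time before every move was ranked
        scored[move] = evaluate(move)
    if len(scored) == len(candidates):
        # Every move was evaluated: play the highest-ranked one.
        return max(scored, key=scored.get)
    # Ranking is incomplete: fall back to a random candidate.
    return rng.choice(list(candidates))


# Hypothetical scores for three candidate moves.
scores = {"Qb6": 0.3, "axb5": 0.9, "Be4": 0.5}
print(choose_move(list(scores), scores.get, time_budget_s=10.0))
print(choose_move(list(scores), scores.get, time_budget_s=0.0))
```

With a generous budget the function returns the top-ranked move. With no time at all it returns an arbitrary one, which from across the board could look like either a blunder or a deliberate, human-style gamble.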

I’m convinced that the ability to act deceptively is one necessary factor for machines to be “intelligent”. Otherwise, they are simply code-crunchers. But there are other aspects as well, which I’m discovering and exploring during the Impakt Festival.

I will continue this line of thought on machine intelligence in future blog posts. I welcome any thoughts and comments on machine intelligence and deception. You can find me on Twitter: @kildall.